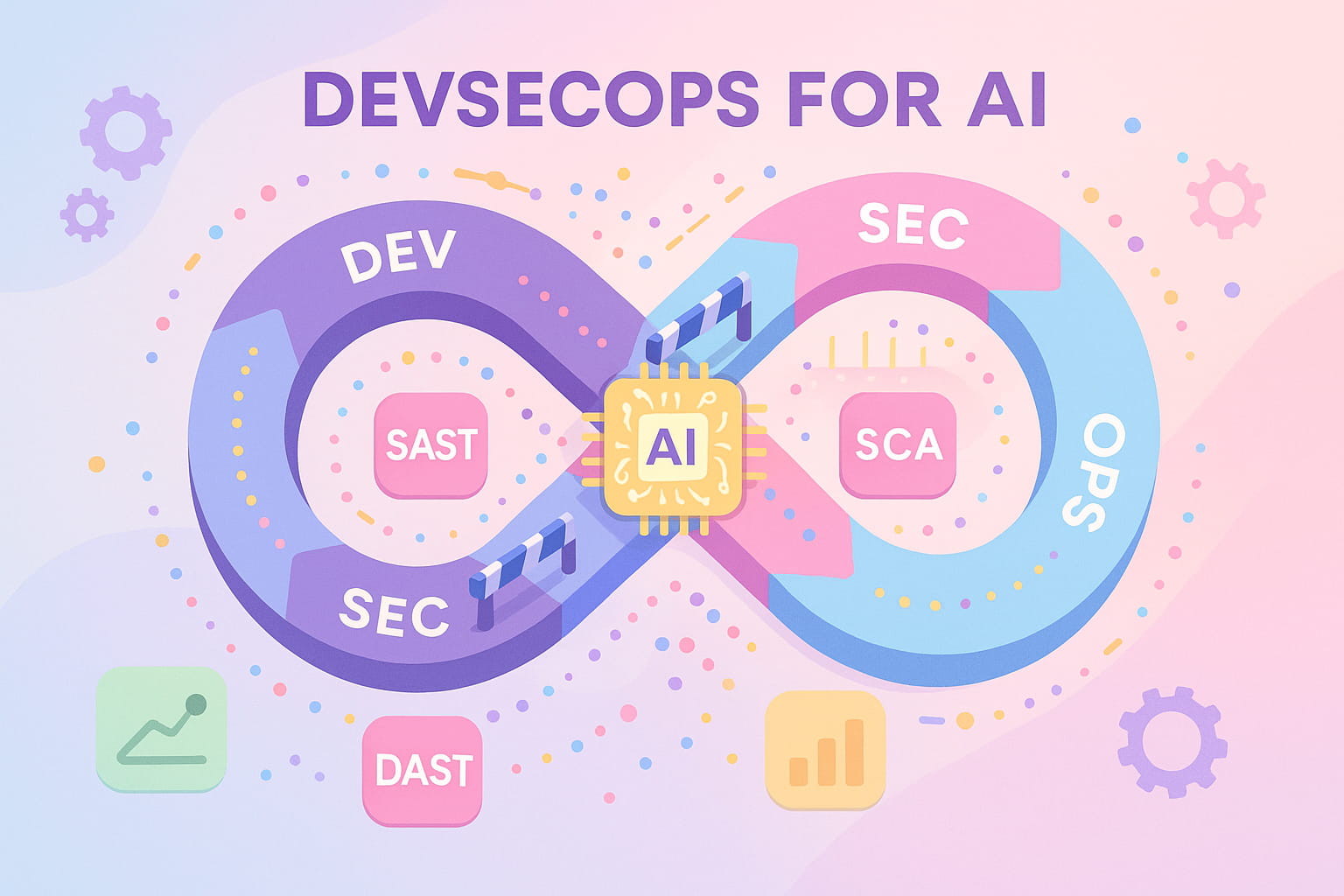

The DevSecOps Evolution for AI

The integration of AI code generation into modern development workflows represents a seismic shift in how we approach application security. Traditional DevSecOps practices, designed for human-written code with predictable patterns and relatively slow change rates, struggle to keep pace with the velocity and volume of AI-generated code.

The velocity of AI-generated code demands a fundamental evolution of DevSecOps. Traditional security testing designed for human-written code fails to catch 45% of LLM-specific vulnerabilities. This guide shows you how to adapt your pipeline for the AI era.

Critical Shift: Security can no longer be a gate—it must be continuous and real-time. LLMs can generate vulnerabilities with every keystroke, requiring security testing to match this pace.

This comprehensive guide walks you through the practical implementation of security testing specifically tailored for AI-generated code. You'll learn how to configure SAST, DAST, IAST, and SCA tools with real-world examples that work in production environments today.

For foundational AI security concepts, see our Complete Guide to Securing LLM-Generated Code.

Unique Challenges of AI-Generated Code

Before diving into solutions, it's crucial to understand why AI-generated code presents unique security challenges that break traditional testing approaches. These challenges stem from fundamental differences in how AI models generate code compared to human developers.

| Challenge | Traditional Approach | Why It Fails for AI | Required Adaptation |

|---|---|---|---|

| Volume | Scan on commit | 100x more code generated | Real-time IDE scanning |

| Velocity | Nightly scans | Code changes every minute | Continuous scanning |

| Patterns | Human anti-patterns | LLMs have unique failure modes | AI-specific rules |

| Context | Code-only analysis | Intent lost in generation | Prompt + code analysis |

| Trust | Developer accountability | "Vibe coding" without understanding | Mandatory verification |

Each of these challenges requires specific tooling adaptations and process changes. Let's explore how to configure your security testing stack to address them effectively.

SAST: Static Analysis for AI Code

Static Application Security Testing (SAST) serves as your first line of defense against vulnerabilities in AI-generated code. However, traditional SAST tools often produce an overwhelming number of false positives when analyzing AI output, as they weren't designed to recognize LLM-specific patterns.

The key to effective SAST for AI code lies in developing custom rules that target the specific anti-patterns LLMs tend to produce. Research shows that LLMs consistently generate certain types of vulnerable code patterns that differ from typical human mistakes.

Configuration and Rules

Creating AI-specific SAST rules requires understanding common LLM failure modes. Here's a comprehensive rule set that catches the most frequent AI-generated vulnerabilities:

Custom Rule Development

These rules specifically target patterns that appear frequently in AI-generated code but rarely in human-written code. The metadata fields help prioritize findings based on the likelihood of occurrence in AI output.

IDE Integration Configuration

Real-time scanning in the IDE is essential for catching vulnerabilities as the AI generates them. This configuration enables immediate feedback, preventing vulnerable code from ever being committed:

The key settings here include scanOnType which triggers analysis as code is generated, andblockCriticalVulnerabilities which can prevent AI assistants from inserting known vulnerable patterns.

AI-Enhanced SAST Tools

Next-generation SAST tools use machine learning to understand context and dramatically reduce false positives. They can differentiate between AI-generated and human-written code, applying different analysis strategies to each.

Traditional SAST Output:

AI-Enhanced SAST Output:

DAST: Dynamic Testing for LLM Applications

Dynamic Application Security Testing (DAST) must evolve beyond traditional web application scanning to effectively test AI-powered applications. The challenge lies in testing not just the application's traditional endpoints, but also the LLM integration points that introduce new attack vectors.

API-First Testing

Modern LLM applications are predominantly API-driven, requiring a shift from traditional web crawling to API-focused testing strategies. This approach ensures comprehensive coverage of all endpoints that process AI-generated content:

This configuration generates specialized payloads for testing LLM-specific vulnerabilities like prompt injection alongside traditional security issues. The dual approach ensures comprehensive coverage.

LLM Endpoint Fuzzing

Fuzzing LLM endpoints requires sophisticated techniques that go beyond traditional input mutation. AI models can be vulnerable to subtle manipulations that wouldn't affect traditional applications:

These fuzzing strategies target specific weaknesses in LLM processing. Unicode injection can bypass input filters, while context overflow attempts to manipulate the model's attention mechanism. Each technique requires careful monitoring to detect successful exploits.

IAST: Runtime Verification

Interactive Application Security Testing (IAST) provides the most accurate vulnerability detection for AI-generated code by observing actual runtime behavior. This is particularly valuable for AI code where static analysis might miss context-dependent vulnerabilities.

IAST instruments your application to track data flow from input to output, identifying vulnerabilities as they occur in real execution paths. For AI applications, this means tracking how LLM outputs flow through your application and whether they're properly validated before use.

IAST Configuration for AI Code

This configuration adds AI-specific monitoring hooks that track LLM interactions. TheaiContext flag ensures that prompts are captured alongside generated code, providing crucial context for understanding vulnerability origins.

The real power of IAST for AI code comes from its ability to detect vulnerabilities that only manifest during runtime. For example, an LLM might generate code that seems safe in isolation but becomes vulnerable when combined with specific application state or user input patterns.

SCA: Managing AI Supply Chain Risk

Software Composition Analysis (SCA) becomes critical when AI assistants suggest dependencies. LLMs often recommend outdated, vulnerable, or even malicious packages because their training data includes code from various time periods and they lack real-time knowledge of security advisories.

Enhanced SCA Configuration

This configuration adds AI-specific checks that traditional SCA tools miss. The reachability analysis is particularly important—it determines whether vulnerable code in a dependency is actually executed, helping prioritize remediation efforts.

Dependency Policy for AI Development

Establishing strict policies for AI-suggested dependencies prevents supply chain attacks and reduces the maintenance burden of managing vulnerable packages:

The ai_suggestions section is crucial—it prevents automatic installation of AI-recommended packages, ensuring human review of all new dependencies. This policy has prevented numerous security incidents in organizations that adopted it early.

Complete Pipeline Integration

Integrating all security testing tools into a unified CI/CD pipeline creates a comprehensive defense system for AI-generated code. The key is orchestrating tools to work together, sharing context and reducing duplicate findings.

Here's a production-ready pipeline that combines all security testing approaches:

This pipeline implements defense in depth, with multiple scanning stages that catch different types of vulnerabilities. The AI detection step is crucial—it identifies which code was likely generated by AI, allowing for targeted security analysis.

Metrics and Monitoring

Measuring the effectiveness of your DevSecOps pipeline for AI code requires new metrics that capture the unique challenges of AI-generated vulnerabilities. Traditional metrics like code coverage aren't sufficient when dealing with the volume and velocity of AI code generation.

| Metric | Target | Measurement | Action if Below |

|---|---|---|---|

| Mean Time to Detect (MTTD) | < 5 minutes | Time from code generation to vulnerability detection | Increase scanning frequency |

| False Positive Rate | < 15% | Invalid findings / Total findings | Tune detection rules |

| AI Code Coverage | > 95% | AI-generated code scanned / Total AI code | Improve detection methods |

| Remediation Time | < 24 hours | Time from detection to fix | Automate fixes |

| Escape Rate | < 1% | Production vulnerabilities / Total detected | Add security gates |

Dashboard Configuration

Real-time visibility into your AI code security posture requires specialized dashboards that track AI-specific metrics alongside traditional security KPIs:

These metrics provide actionable insights into your security posture. The vulnerability density metric is particularly useful for identifying AI models or prompts that consistently generate insecure code, allowing for targeted improvements.

Best Practices and Recommendations

Successfully securing AI-generated code requires more than just tools—it demands a cultural shift in how development teams approach security. Here are proven practices from organizations that have successfully adapted their DevSecOps for AI:

- Shift Security Left and Make It Continuous

Security scanning must happen in real-time as code is generated, not hours or days later. Configure IDE plugins to scan on every keystroke and provide immediate feedback.

- Track Prompt-to-Code Lineage

Always maintain the connection between prompts and generated code. This context is invaluable for understanding why certain vulnerabilities appear and how to prevent them.

- Implement Progressive Security Gates

Use risk-based gating that becomes stricter as code moves toward production. Allow experimental AI code in development but require thorough validation before deployment.

- Automate Everything Possible

Manual security review cannot scale with AI code generation velocity. Automate scanning, remediation, and even approval workflows for low-risk changes.

- Measure and Optimize Continuously

Track metrics religiously and use them to optimize your pipeline. Every percentage point improvement in detection accuracy or reduction in false positives compounds over time.

Key Takeaways

Remember:

- • Speed is critical: Security must match the velocity of AI code generation

- • Context matters: Track prompts alongside code for better analysis

- • Automation is essential: Manual review can't scale with AI volume

- • Metrics drive improvement: Measure everything to optimize the pipeline

- • Integration is key: Security tools must work together seamlessly

The evolution of DevSecOps for AI is not a one-time transformation but an ongoing journey. As AI models become more sophisticated and generate increasingly complex code, our security practices must evolve in parallel. The organizations that succeed will be those that embrace continuous adaptation and learning.

Next Steps

Ready to implement these practices in your organization? Explore these related guides for deeper dives into specific aspects of AI code security: