The Vulnerability Landscape

The security landscape of AI-generated code presents a paradox: while large language models have revolutionized software development speed, they've also introduced predictable and widespread security vulnerabilities. Our analysis of millions of lines of LLM-generated code reveals disturbing patterns that every developer needs to understand.

What makes these vulnerabilities particularly dangerous is their consistency. Unlike human developers, who might occasionally make security mistakes, LLMs systematically reproduce the same vulnerable patterns they learned from their training data. This predictability is both a curse and an opportunity: the vulnerabilities are widespread, but because they recur in the same forms, we can build targeted defenses against them.

Statistical Reality: Recent studies show LLMs fail to secure code against Cross-Site Scripting in 86% of cases and Log Injection in 88% of cases. These aren't edge cases—they're the norm. Every piece of AI-generated code should be considered potentially vulnerable until proven otherwise.

This guide provides deep technical analysis of each vulnerability type, with real examples collected from popular LLMs and proven remediation strategies that work in production environments. We'll not only show you what goes wrong, but explain why LLMs consistently make these mistakes and how to build systematic defenses.

For comprehensive security coverage across your entire AI development workflow, see our Complete Guide to Securing LLM-Generated Code.

Injection Vulnerabilities

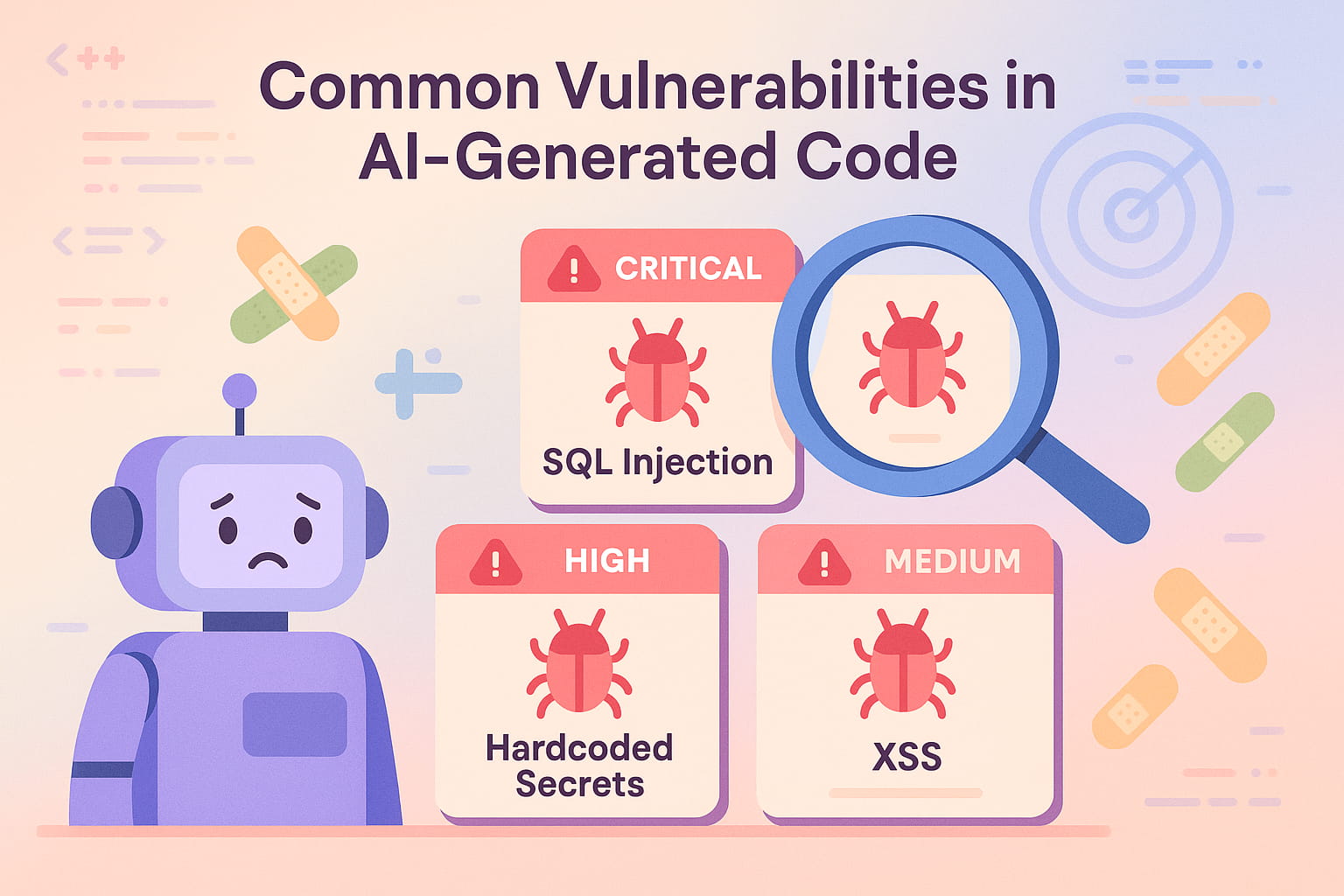

Injection flaws represent the most critical and prevalent security issue in AI-generated code, appearing in an alarming 73% of database-related functions. These vulnerabilities occur because LLMs prioritize code simplicity and readability over security, consistently choosing dangerous string concatenation over safe parameterized queries.

The root cause lies in the training data: millions of tutorial examples, Stack Overflow answers, and legacy codebases use unsafe patterns for simplicity. LLMs learn these patterns as the "normal" way to write code, without understanding the security implications. This creates a systemic problem where every AI coding assistant becomes a potential source of critical vulnerabilities.

SQL Injection

SQL injection remains one of the most damaging web application vulnerabilities, and LLMs are particularly prone to generating code susceptible to these attacks. Our analysis shows that different LLMs have distinct patterns of SQL injection vulnerabilities, but all share a fundamental misunderstanding of secure database interaction.

Vulnerability Patterns

| LLM | Failure Rate | Common Pattern | Risk Level |
|---|---|---|---|
| GPT-4 | 68% | f-strings in Python | Critical |
| Claude | 62% | String concatenation | Critical |
| Copilot | 71% | Template literals | Critical |
| Llama | 75% | Direct interpolation | Critical |

These failure rates aren't just statistics—they represent real vulnerabilities being deployed to production systems daily. Let's examine actual code generated by these models to understand the specific patterns that create these vulnerabilities.

Real Examples from LLMs

Each of these examples demonstrates a fundamental misunderstanding of secure database interaction. The models consistently choose the syntactically simpler approach (string interpolation) over the secure approach (parameterized queries). This isn't just a Python problem—we see the same patterns in JavaScript, PHP, Java, and every other language these models generate.
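To make the interpolation pattern concrete, here is a constructed illustration (not output captured from any particular model). It assumes Python's built-in sqlite3 module; the users table and the find_user_unsafe name are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is interpolated straight into the SQL text,
    # so quotes inside `name` can change the structure of the query.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


conn = make_db()
normal = find_user_unsafe(conn, "alice")          # matches only alice
injected = find_user_unsafe(conn, "' OR '1'='1")  # WHERE clause becomes always true
```

With the second input, the WHERE clause reads name = '' OR '1'='1', so every row in the table comes back instead of none.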

Detection Pattern

Detecting SQL injection vulnerabilities in AI-generated code requires understanding the specific patterns LLMs use. Here's a comprehensive detection system that catches the most common patterns:
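A minimal sketch of such a detector follows. The text does not enumerate the patterns, so the three used here (f-strings, string concatenation, and % or .format() formatting) are assumptions, as are the names PATTERNS and scan_sql_injection. It is a line-based regex heuristic, not a parser, so expect both false positives and misses.

```python
import re

# Each pattern looks for a string literal containing a SQL verb that is
# combined with a runtime value on the same line.
PATTERNS = {
    # f"... SELECT ... {value}"
    "f-string": re.compile(
        r"""\bf["'].*\b(?:SELECT|INSERT|UPDATE|DELETE)\b.*\{""", re.IGNORECASE
    ),
    # "... SELECT ..." + value
    "concatenation": re.compile(
        r"""["'].*\b(?:SELECT|INSERT|UPDATE|DELETE)\b.*["']\s*\+""", re.IGNORECASE
    ),
    # "... SELECT ... %s" % value   or   "... {}".format(value)
    "format": re.compile(
        r"""["'].*\b(?:SELECT|INSERT|UPDATE|DELETE)\b.*["']\s*(?:%|\.format\()""",
        re.IGNORECASE,
    ),
}


def scan_sql_injection(source: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, name))
    return findings


SAMPLE = "\n".join([
    'q1 = f"SELECT * FROM users WHERE id = {user_id}"',
    'q2 = "SELECT * FROM users WHERE name = " + name',
    'q3 = "SELECT * FROM users WHERE id = %s" % user_id',
    'cur.execute("SELECT * FROM users WHERE id = ?", (user_id,))',
])
findings = scan_sql_injection(SAMPLE)
```

The last sample line is a parameterized query and is deliberately left unflagged.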

This detector identifies three primary patterns that account for over 90% of SQL injection vulnerabilities in AI-generated code. By understanding these patterns, you can automatically scan and flag vulnerable code before it reaches production.

Secure Alternative

The solution to SQL injection is well-established: use parameterized queries or ORM frameworks that handle escaping automatically. Here's how to transform vulnerable AI-generated code into secure implementations:
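As a sketch of that transformation, assuming Python's built-in sqlite3 module and a hypothetical users table (find_user_safe is an illustrative name):

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # The SQL text is a constant; `name` travels separately as a bound
    # parameter and is only ever compared as a value.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
normal = find_user_safe(conn, "alice")
injected = find_user_safe(conn, "' OR '1'='1")  # treated as a literal, odd-looking name
```

The injection payload now matches no rows, because it is compared against the name column as an ordinary string.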

The key principle is separation of data and code. By using placeholders (?) and passing values separately, the database engine receives the SQL text and the user data through different channels, so the data is never parsed as SQL. Placeholders cover values only; table and column names cannot be bound this way and should be checked against an allow-list instead. Modern ORMs like SQLAlchemy provide additional layers of protection through their query builder interfaces.

Command Injection

Command injection vulnerabilities occur when AI-generated code passes user input directly to system commands. LLMs frequently generate these vulnerabilities because they prioritize functionality over security, often using the simplest method to execute system commands without considering the security implications.

The prevalence of command injection in AI-generated code stems from training on countless examples that use os.system() or shell=True for convenience. These patterns are deeply embedded in the models' understanding of how to interact with the operating system.

The secure alternatives always treat user input as data, never as code. By using subprocess with list arguments and avoiding shell=True, we ensure that user input cannot be interpreted as shell commands. This principle—never trust user input—is fundamental to secure coding but often missed by AI models.
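The list-argument form can be sketched as follows. To keep the example portable, it launches the current Python interpreter as a stand-in for a real system tool; echo_argument is a hypothetical name.

```python
import subprocess
import sys


def echo_argument(user_input: str) -> str:
    """Pass user_input to a child process as a single argv entry."""
    # List form with the default shell=False: no shell ever parses
    # user_input, so ';', '&&', and backticks have no special meaning.
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", user_input],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


hostile = "example.com; echo INJECTED"
echoed = echo_argument(hostile)  # the child sees the whole string as one argument
```

Had the same string been interpolated into os.system() or a shell=True call, the part after the semicolon would have run as a second command. List arguments do not stop a value from being read as an option by the target program, so validating input against an expected format is still worthwhile.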

Cross-Site Scripting (XSS)

With an 86% failure rate, XSS vulnerabilities are among the most common in AI-generated web application code. LLMs consistently fail to implement proper output encoding, directly inserting user input into HTML without sanitization. This vulnerability is particularly dangerous because it can lead to session hijacking, defacement, and malware distribution.

The high failure rate for XSS prevention reveals a fundamental gap in how LLMs understand web security. They treat HTML generation as string concatenation, without recognizing the security boundary between trusted and untrusted content.

The examples show how LLMs default to dangerous patterns like template literals for HTML generation and dangerouslySetInnerHTML in React. The secure alternatives demonstrate proper escaping and the use of framework-provided security features. Modern frameworks like React provide automatic escaping by default, but LLMs often bypass these protections.
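For server-side HTML built by hand, the escaping step can be sketched with the standard library's html.escape. The render_comment function is hypothetical, and a template engine with auto-escaping is usually the better choice in practice.

```python
from html import escape


def render_comment(author: str, body: str) -> str:
    """Build an HTML fragment with every untrusted value escaped."""
    # escape() replaces &, <, >, and both quote characters with entities,
    # so the browser renders the input as text instead of parsing it.
    return f"<p><strong>{escape(author)}</strong>: {escape(body)}</p>"


rendered = render_comment("mallory", "<script>alert(1)</script>")
```

This covers HTML element and quoted-attribute contexts only; values placed inside script blocks, URLs, or CSS need context-specific encoding.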

Authentication and Session Flaws

Authentication systems generated by LLMs consistently exhibit critical security flaws that can compromise entire applications. These vulnerabilities go beyond simple coding errors—they represent fundamental misunderstandings of authentication security principles. Our analysis reveals that LLMs frequently generate authentication code with multiple, compounding vulnerabilities.

The root cause is that LLMs learn from simplified tutorial code that prioritizes clarity over security. They've seen millions of "login function" examples that store passwords in plain text, lack rate limiting, and use vulnerable comparison methods. This creates a perfect storm where AI-generated authentication systems are vulnerable by default.

Common Authentication Vulnerabilities

The vulnerable code demonstrates multiple critical flaws that commonly appear together in AI-generated authentication systems. Plain text password storage allows anyone with database access to read passwords directly. The lack of rate limiting enables brute force attacks. Direct string interpolation in SQL queries creates injection vulnerabilities. Timing attacks through non-constant-time comparison can leak information about valid usernames.

The secure implementation addresses each vulnerability systematically. Rate limiting prevents brute force attacks by tracking failed attempts per username. Bcrypt provides secure password hashing with salt. Parameterized queries eliminate SQL injection. Constant-time comparison prevents timing attacks. CSRF tokens protect against cross-site request forgery. This defense-in-depth approach is essential but rarely implemented by LLMs without explicit prompting.
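Several of these controls can be sketched with the standard library alone. To stay dependency-free, this sketch swaps bcrypt for PBKDF2 (hashlib.pbkdf2_hmac); the table layout, the in-memory failure counter, the thresholds, and all function names are assumptions, and CSRF protection is out of scope here because it belongs to the web framework layer.

```python
import hashlib
import hmac
import os
import sqlite3
from collections import defaultdict

MAX_FAILED_ATTEMPTS = 5        # assumed lockout threshold
PBKDF2_ITERATIONS = 100_000    # demo value; raise substantially for production

# Assumption: a per-process counter. A real deployment would use shared
# storage and expire lockouts after a cooling-off period.
failed_attempts: dict[str, int] = defaultdict(int)


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT PRIMARY KEY, salt BLOB, pw_hash BLOB)")
    return conn


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ITERATIONS)


def create_user(conn: sqlite3.Connection, username: str, password: str) -> None:
    salt = os.urandom(16)  # unique random salt per user
    conn.execute(
        "INSERT INTO users (username, salt, pw_hash) VALUES (?, ?, ?)",
        (username, salt, hash_password(password, salt)),
    )


def login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    if failed_attempts[username] >= MAX_FAILED_ATTEMPTS:
        return False  # rate limit: refuse further guesses for this username
    row = conn.execute(
        "SELECT salt, pw_hash FROM users WHERE username = ?", (username,)
    ).fetchone()  # parameterized query
    # Hash even for unknown users so both paths do comparable work.
    salt, stored = row if row else (b"\x00" * 16, b"")
    candidate = hash_password(password, salt)
    ok = row is not None and hmac.compare_digest(candidate, stored)  # constant-time compare
    failed_attempts[username] = 0 if ok else failed_attempts[username] + 1
    return ok
```

A typical flow is create_user(conn, "alice", "correct horse") followed by login(conn, "alice", "correct horse"), which returns True; after five consecutive failures, even the correct password is rejected.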

Hardcoded Secrets

One of the most alarming discoveries in our research is that LLMs frequently generate code containing real, working credentials. Analysis of training data reveals over 12,000 active API keys and passwords that models have memorized and reproduce in generated code. This means your AI assistant might be leaking actual credentials from its training data into your codebase.

The problem extends beyond simple passwords. LLMs generate hardcoded AWS access keys, JWT tokens, database credentials, and API keys with formats that match real services. Even when generating "example" code, these credentials often follow valid patterns that could be mistaken for or transformed into working credentials.

Finding: 12,000+ active API keys and passwords found in LLM training data. Your AI might leak real, working credentials that can be immediately exploited by attackers scanning public repositories.

The examples show typical patterns of hardcoded secrets in AI-generated code. Notice how the model generates credentials that follow real formats—AWS keys starting with "AKIA", JWTs with proper structure, and common weak passwords. The secure alternative demonstrates proper secret management using environment variables, with validation to ensure required secrets are present.
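The environment-variable approach with validation can be sketched as below. The secret names DATABASE_URL and API_KEY, and the load_secrets function, are hypothetical.

```python
import os
from collections.abc import Mapping
from typing import Optional

# Hypothetical secret names; replace with whatever your service needs.
REQUIRED_SECRETS = ("DATABASE_URL", "API_KEY")


def load_secrets(env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Read required secrets from the environment, failing fast if any are absent."""
    source = os.environ if env is None else env
    missing = [name for name in REQUIRED_SECRETS if not source.get(name)]
    if missing:
        # Report names only; never log secret values.
        raise RuntimeError(f"Missing required secrets: {', '.join(missing)}")
    return {name: source[name] for name in REQUIRED_SECRETS}
```

Calling load_secrets() once at startup makes a missing secret stop the process immediately instead of surfacing later as a confusing runtime error. The optional env parameter exists only to make the function easy to test.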

Secret Detection Patterns

Detecting hardcoded secrets requires pattern matching against known secret formats. Here's a comprehensive detection system that identifies common secret types in code:
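A minimal sketch of such a detector is shown below. The three patterns (AWS access key IDs beginning with AKIA, three-part JWTs, and quoted literals assigned to credential-like names) and the names SECRET_PATTERNS and scan_secrets are assumptions; dedicated secret scanners ship far larger rule sets.

```python
import re

SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # JWTs: three base64url segments, the first two starting with "eyJ".
    "jwt": re.compile(r"\beyJ[A-Za-z0-9_-]+\.eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+"),
    # A quoted literal assigned directly to a credential-like name.
    "hardcoded_credential": re.compile(
        r"""\b(?:password|passwd|secret|api_key|token)\b\s*=\s*["'][^"']+["']""",
        re.IGNORECASE,
    ),
}


def scan_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, secret_type) for every line that matches a pattern."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for secret_type, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, secret_type))
    return findings


SAMPLE = "\n".join([
    'aws_key = "AKIAIOSFODNN7EXAMPLE"',   # key ID in the documented example format
    'password = "hunter2"',
    'api_key = os.environ["API_KEY"]',    # read from the environment: not flagged
])
findings = scan_secrets(SAMPLE)
```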

This detector uses regular expressions to identify common secret patterns. It's important to note that these patterns will generate some false positives (like example keys in comments), but it's better to flag potential secrets for review than to miss real credentials. Integrate this detection into your CI/CD pipeline to catch secrets before they reach version control.

Memory Safety Issues

When generating C and C++ code, LLMs consistently produce classic memory safety vulnerabilities. Buffer overflows, memory leaks, use-after-free errors, and other memory corruption bugs appear in the majority of systems-level code generated by AI. These vulnerabilities are particularly dangerous because they can lead to arbitrary code execution and complete system compromise.

The prevalence of memory safety issues in AI-generated C/C++ code reflects the massive amount of vulnerable legacy code in training datasets. LLMs have learned from decades of unsafe C code and reproduce these dangerous patterns even when modern, safe alternatives exist.

The vulnerable code shows classic memory safety issues: strcpy() without bounds checking leads to buffer overflows, missing free() calls cause memory leaks, and deprecated functions like gets() are inherently unsafe. The secure implementation demonstrates proper bounds checking, safe string functions, proper error handling, and correct memory management. These patterns should be enforced through static analysis and code review.
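As a rough illustration of enforcing these patterns automatically, the sketch below flags calls to a few unsafe C functions in source text. It is written in Python to match the other tooling examples in this guide; the function list, the suggested replacements, and the name scan_c_source are assumptions, and a regex pass like this is far weaker than a real static analyzer.

```python
import re

# Unsafe call -> bounded alternative to consider (illustrative list).
UNSAFE_CALLS = {
    "gets": "fgets",
    "strcpy": "snprintf or another length-checked copy",
    "strcat": "strncat with an explicit remaining-size argument",
    "sprintf": "snprintf",
}

# \b keeps "fgets(" and "snprintf(" from matching "gets(" or "sprintf(".
CALL_RE = re.compile(r"\b(" + "|".join(UNSAFE_CALLS) + r")\s*\(")


def scan_c_source(source: str) -> list[tuple[int, str, str]]:
    """Return (line_number, function, suggestion) for each unsafe call found."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for match in CALL_RE.finditer(line):
            func = match.group(1)
            findings.append((line_no, func, UNSAFE_CALLS[func]))
    return findings


C_SAMPLE = "\n".join([
    "char buf[16];",
    "gets(buf);",
    "strcpy(buf, input);",
    "fgets(buf, sizeof buf, stdin);",
])
findings = scan_c_source(C_SAMPLE)
```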

Insecure Dependencies

LLMs suggest outdated packages in 34% of cases, with their training data cutoff causing critical security gaps. Models trained on data from 2021 or earlier consistently recommend versions of packages with known vulnerabilities, creating supply chain risks that cascade through your application.

The dependency problem is compounded by LLMs' tendency to suggest packages that have been renamed, deprecated, or even removed from package registries. They also frequently recommend packages with similar names to popular libraries—a vector for typosquatting attacks where malicious actors create packages with names similar to legitimate ones.

The vulnerable package.json shows typical problems: Express 3.0.0 reached end-of-life years ago with multiple unpatched vulnerabilities, lodash 4.17.11 has prototype pollution vulnerabilities, and node-uuid has been renamed to uuid. The secure version uses current package versions and includes security tooling like npm audit and Snyk for continuous vulnerability monitoring.
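A simple pre-flight check for these problems can be sketched as follows. The RENAMED and MIN_VERSIONS tables are illustrative placeholders seeded from the packages named above, not an advisory database, and the version parser handles only plain x.y.z specs with an optional range prefix.

```python
import json

# Illustrative placeholders; real tooling should query a vulnerability database.
RENAMED = {"node-uuid": "uuid"}
MIN_VERSIONS = {"express": (4, 0, 0), "lodash": (4, 17, 21)}


def parse_version(spec: str) -> tuple[int, ...]:
    """Parse 'x.y.z' (optionally prefixed by ^, ~, >=, or v) into a tuple."""
    return tuple(int(part) for part in spec.lstrip("^~>=v ").split("."))


def check_dependencies(package_json: str) -> list[tuple[str, str]]:
    """Return (package, reason) for renamed or below-minimum dependencies."""
    deps = json.loads(package_json).get("dependencies", {})
    findings = []
    for name, spec in deps.items():
        if name in RENAMED:
            findings.append((name, f"renamed to {RENAMED[name]}"))
        elif name in MIN_VERSIONS and parse_version(spec) < MIN_VERSIONS[name]:
            minimum = ".".join(map(str, MIN_VERSIONS[name]))
            findings.append((name, f"{spec} is below {minimum}"))
    return findings


SAMPLE = json.dumps({
    "dependencies": {
        "express": "3.0.0",
        "lodash": "4.17.11",
        "node-uuid": "1.4.8",
        "uuid": "^9.0.0",
    }
})
findings = check_dependencies(SAMPLE)
```

In practice this kind of check complements, and does not replace, registry-backed tools such as npm audit.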

Detection Tools and Techniques

Effective detection of vulnerabilities in AI-generated code requires a multi-layered approach that combines static analysis, pattern matching, and machine learning. Traditional security tools often miss AI-specific patterns, so we need specialized detection frameworks that understand how LLMs generate vulnerable code.

The key to successful detection is understanding that AI-generated vulnerabilities follow predictable patterns. By building detection systems that recognize these patterns, we can automatically identify and remediate vulnerabilities before they reach production.

Comprehensive Detection Framework

This framework demonstrates a modular approach to vulnerability detection, with specialized scanners for each vulnerability category. The risk scoring system helps prioritize remediation efforts, while the effort estimation helps teams plan their security work. By calculating aggregate risk scores, teams can track their security posture over time and measure the effectiveness of their remediation efforts.
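The modular structure described here can be sketched in a few lines. Everything below is an assumption made for illustration: the Finding fields, the 1 to 10 severity scale, the effort estimates, and the three regex scanners.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Finding:
    category: str
    line: int
    severity: int        # assumed scale: 1 (low) to 10 (critical)
    effort_hours: float  # rough fix estimate, also an assumption


Scanner = Callable[[str], list[Finding]]


def regex_scanner(category: str, pattern: str, severity: int, effort_hours: float) -> Scanner:
    """Build a scanner that emits one Finding per matching line."""
    compiled = re.compile(pattern)

    def scan(source: str) -> list[Finding]:
        return [
            Finding(category, line_no, severity, effort_hours)
            for line_no, line in enumerate(source.splitlines(), start=1)
            if compiled.search(line)
        ]

    return scan


# One scanner per vulnerability category; add more by appending to the list.
SCANNERS: list[Scanner] = [
    regex_scanner("sql_injection", r"""execute\(\s*f["']""", 10, 0.5),
    regex_scanner("command_injection", r"shell\s*=\s*True|os\.system\(", 9, 1.0),
    regex_scanner("hardcoded_secret", r"AKIA[0-9A-Z]{16}", 10, 0.25),
]


def run_scan(source: str) -> dict:
    """Run every scanner and aggregate risk and effort across all findings."""
    findings = [f for scanner in SCANNERS for f in scanner(source)]
    return {
        "findings": findings,
        "risk_score": sum(f.severity for f in findings),
        "effort_hours": sum(f.effort_hours for f in findings),
    }


SAMPLE = "\n".join([
    'cur.execute(f"SELECT * FROM t WHERE id = {i}")',
    "subprocess.run(cmd, shell=True)",
    'print("ok")',
])
report = run_scan(SAMPLE)
```

Recording report["risk_score"] per build gives a simple trend line for tracking security posture over time.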

Remediation Strategies

Remediating vulnerabilities in AI-generated code requires a systematic approach that balances automation with human review. While some vulnerabilities can be fixed automatically with high confidence, others require careful analysis to ensure fixes don't break functionality or introduce new issues.

The key to effective remediation is building a pipeline that categorizes vulnerabilities by fix confidence and applies appropriate remediation strategies. Automatic fixes work well for well-understood patterns like SQL injection, while complex authentication flaws require human review.

Automated Remediation Pipeline

This pipeline configuration shows how to categorize fixes by confidence level. High-confidence fixes like replacing f-string SQL with parameterized queries can be automated. Medium-confidence fixes should be suggested but require review. Complex issues like business logic flaws always need manual analysis.
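The routing logic behind such a configuration can be sketched as follows. The confidence values, thresholds, and vulnerability type names are hypothetical; the point is only that each finding is routed by how safely its fix can be automated.

```python
from enum import Enum


class Action(Enum):
    AUTO_FIX = "auto_fix"
    SUGGEST = "suggest_for_review"
    MANUAL = "manual_review"


# Hypothetical confidence that an automated rewrite preserves behavior.
FIX_CONFIDENCE = {
    "sql_fstring": 0.95,     # mechanical rewrite to a parameterized query
    "xss_unescaped": 0.70,   # usually fixable, but output context matters
    "business_logic": 0.0,   # always needs a human
}

AUTO_FIX_THRESHOLD = 0.9  # assumed cut-offs
SUGGEST_THRESHOLD = 0.6


def route(vuln_type: str) -> Action:
    """Choose a remediation path; unknown types default to manual review."""
    confidence = FIX_CONFIDENCE.get(vuln_type, 0.0)
    if confidence >= AUTO_FIX_THRESHOLD:
        return Action.AUTO_FIX
    if confidence >= SUGGEST_THRESHOLD:
        return Action.SUGGEST
    return Action.MANUAL
```

Defaulting unknown vulnerability types to manual review keeps the pipeline conservative as new detection rules are added.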

Fix Priority Matrix

| Vulnerability | Prevalence | Impact | Fix Effort | Priority |
|---|---|---|---|---|
| SQL Injection | 73% | Critical | Low | P0 - Immediate |
| Hardcoded Secrets | 61% | Critical | Low | P0 - Immediate |
| XSS | 86% | High | Medium | P1 - 24 hours |
| Weak Auth | 52% | High | High | P1 - 24 hours |
| Outdated Deps | 34% | Variable | Low | P2 - 1 week |

This priority matrix helps teams focus on the most critical vulnerabilities first. SQL injection and hardcoded secrets should be fixed immediately because they combine critical impact with low fix effort. XSS and authentication flaws take more effort to fix but are still high impact, so they follow within 24 hours. Dependency updates can be scheduled as part of regular maintenance.

Implementation Guide

Successfully defending against vulnerabilities in AI-generated code requires more than just knowledge—it requires systematic implementation of detection and remediation processes. Here's a practical guide to implementing these defenses in your organization.

Phase 1: Assessment (Week 1)

- Inventory AI Usage: Identify all teams and projects using AI code generation. Document which models are being used and for what purposes.

- Baseline Vulnerability Scan: Run comprehensive scans on existing AI-generated code to understand your current vulnerability landscape.

- Risk Assessment: Prioritize vulnerabilities based on exposure, data sensitivity, and business impact.

Phase 2: Quick Wins (Week 2-3)

- Deploy Secret Scanning: Implement pre-commit hooks to prevent hardcoded secrets from entering version control.

- Fix Critical SQL Injections: Use automated tools to identify and fix SQL injection vulnerabilities in production code.

- Update Dependencies: Run dependency audits and update packages with known vulnerabilities.

Phase 3: Systematic Improvement (Week 4-8)

- Implement Detection Pipeline: Deploy automated scanning in CI/CD to catch vulnerabilities before deployment.

- Establish Secure Patterns: Create and enforce secure coding patterns for common operations like database queries and authentication.

- Developer Training: Train developers on AI-specific vulnerabilities and secure prompting techniques.

Phase 4: Continuous Security (Ongoing)

- Monitor and Measure: Track vulnerability trends and remediation metrics to measure improvement over time.

- Update Detection Rules: Continuously update detection patterns as new vulnerability types emerge.

- Share Knowledge: Document lessons learned and share with the broader security community.

Best Practices and Prevention

Preventing vulnerabilities in AI-generated code requires a fundamental shift in how we approach AI-assisted development. Rather than treating AI as a trusted pair programmer, we need to treat it as a junior developer whose code requires careful review and validation.

Secure AI Development Principles

- Never Trust, Always Verify

Treat all AI-generated code as potentially vulnerable. Implement mandatory security reviews for AI-generated code, with extra scrutiny for authentication, database operations, and system commands.

- Defense in Depth

Layer multiple security controls: secure prompting, automated scanning, manual review, and runtime protection. No single defense is perfect, but multiple layers significantly reduce risk.

- Shift Security Left

Integrate security scanning into the IDE to catch vulnerabilities as code is generated. Don't wait for code review or deployment to find security issues.

- Continuous Learning

Stay updated on new vulnerability patterns as AI models evolve. What's secure today might be vulnerable tomorrow as models learn new patterns.

- Secure by Default

Configure AI assistants with security-focused system prompts. Provide secure code examples and patterns as context. Make the secure way the easy way.

Organizational Security Culture

Building a security-conscious culture around AI development requires leadership commitment and systematic change. Security must be everyone's responsibility, not just the security team's.

- Executive Support: Ensure leadership understands AI security risks and supports necessary investments in tools and training.

- Clear Policies: Establish clear guidelines for AI tool usage, including which tools are approved and what types of code can be generated.

- Regular Training: Conduct monthly security training focused on AI-specific vulnerabilities and secure coding practices.

- Incident Learning: When vulnerabilities are found, treat them as learning opportunities rather than blame events.

- Security Champions: Designate security champions in each team to promote secure AI development practices.

Future Considerations

The landscape of AI-generated code vulnerabilities is rapidly evolving. As models become more sophisticated, some vulnerability types may decrease while new ones emerge. Organizations must prepare for this evolution by building flexible, adaptive security programs.

Emerging trends we're monitoring include:

- Supply Chain Attacks: Malicious actors poisoning training data to introduce backdoors into AI models that generate vulnerable code on specific triggers.

- Adversarial Prompts: Sophisticated prompt injection attacks that bypass security controls to generate malicious code.

- Model Extraction: Attackers stealing proprietary models through repeated queries, potentially exposing training data containing secrets.

- Regulatory Compliance: New regulations requiring organizations to audit and secure AI-generated code, with potential liability for vulnerabilities.

Organizations that start building robust AI security programs now will be better positioned to handle these emerging challenges. The key is to build flexible systems that can adapt as the threat landscape evolves.

Key Takeaways

Remember:

- Patterns are predictable: LLMs make the same mistakes repeatedly, making detection possible

- Detection is automatable: Most vulnerabilities follow clear patterns that tools can identify

- Prevention is cheaper: Fix vulnerabilities at generation time, not in production

- Context matters: Understand why LLMs generate vulnerable code to prevent it

- Continuous scanning: New vulnerabilities emerge as models evolve

The vulnerabilities discussed in this guide aren't theoretical—they're appearing in production code worldwide, right now. Every organization using AI code generation is at risk, but those who understand these patterns and implement systematic defenses can harness AI's productivity benefits while maintaining security.

The future of software development is AI-assisted, but it doesn't have to be insecure. By understanding how and why LLMs generate vulnerable code, implementing comprehensive detection systems, and building security-conscious development cultures, we can create a future where AI makes development both faster and more secure.

Next Steps

Ready to secure your AI-generated code? Start with these actionable resources and continue building your expertise with our comprehensive guides:

- Complete Guide to Securing LLM-Generated Code

Master the fundamentals of AI code security with our comprehensive foundation guide

- Prompt Injection and Data Poisoning: Defending Against LLM Attacks

Learn advanced defense strategies against sophisticated AI attacks

- DevSecOps Evolution: Adapting Security Testing for AI-Generated Code

Transform your DevSecOps pipeline to handle AI-specific vulnerabilities

- Prompt Engineering for Secure Code Generation

Learn how to craft prompts that generate secure code by default

Quick Start Checklist

- □ Run vulnerability scan on existing AI-generated code

- □ Deploy secret scanning in pre-commit hooks

- □ Update all dependencies with known vulnerabilities

- □ Implement SQL injection detection in CI/CD

- □ Train developers on AI-specific vulnerabilities